Modern web technologies have brought in a plethora of JavaScript frameworks that help in building responsive UIs. JSF is a component-based MVC framework that is part of the standard JEE stack; it is an end-to-end web framework in its own right, with access to multiple JEE components.

Both JS+REST and JSF are mature, and each is at its best within its own scope.

Comparison Criteria: The criteria below cover the main points of comparison between the two approaches; specific business use cases may call for additional ones.

- Popularity and community support: There are multiple JS libraries and client-side MVC frameworks, such as ReactJS and AngularJS, which are very popular and have strong community support. JSF component libraries such as PrimeFaces also have strong community support.

- Support: Given their large communities, both models offer comparable community support. Weighing individual options, JSF-based frameworks tend to have better support than Ajax+REST stacks, as they are backed by the companies that created them.

- Documentation: A framework's documentation directly affects developer productivity. Mature frameworks such as AngularJS, PrimeFaces and ReactJS are all very well documented.

- License: This directly affects the overall cost of the product throughout its lifetime. Most of the frameworks are free to use if no commercial support is required.

- Availability of resources: The skills of the available people directly affect the overall project timeline and cost. This depends on the kind of organisation: for a Java shop JSF is better suited, whereas for front-end-focused companies JavaScript+REST is better suited.

- Learning curve: The time the team needs to become productive directly affects the overall project timeline and cost. JSF in general has a steeper learning curve than JavaScript, but for an experienced component MVC developer JSF is easier to pick up than pure front-end technologies.

- Productivity: This depends on the skills of the team. A Java developer with exposure to component MVC can use existing tooling and be more productive with JSF. Front-end developers are likely to be more productive with JS+REST, given their familiarity with the stack and the lighter tooling needed for development and white-box testing.

- Page complexity: Consider whether the pages are complex enough to demand the rich, highly interactive and responsive UI that JavaScript frameworks provide.

Technical Criteria: These criteria affect the QoS (Quality of Service) requirements and a few other technical aspects of the application.

- Sustainability: Sustainability is the ability to remain productive indefinitely while providing value to, and ensuring a safe world for, all stakeholders. One key criterion is carbon footprint, which has more to do with web page design than with the underlying framework. Mobile-first apps with less content and faster page loads can be more sustainable. PrimeFaces, a JSF 2 component library powered by jQuery, can be as competent as JS+REST apps at achieving comparable page loads. In general Ajax+REST is expected to make fewer HTTP requests, but a badly designed application can issue more Ajax requests, to the detriment of sustainability.

- Security: A server-side framework like JSF is generally considered more secure than a pure client-side framework. At the same time, a badly designed JSF application can be less secure than a well-designed JavaScript application.

- Life-cycle complexity: The JSF request life cycle is more complex than that of JS frameworks.

- Back-end integration: JSF is suitable only if the back end is a Java application. For non-Java back ends, JavaScript or the back end's native web frameworks are the obvious choices.

- Supported protocols: JSF supports HTTP and WebSocket (JSF 2.3+). JavaScript runs in the browser, so JavaScript apps can use most of the protocols the browser supports.

- Routing options: JSF is a multi-page framework in which navigation can be declared programmatically or in XML configuration. Client-side JS MVC frameworks like AngularJS also provide routing options for single-page applications (SPAs).

- Data binding: JSF has better support for data binding when the entire workflow from web view to database is considered. JS frameworks bind data only to the HTML view, and an additional data binding implementation is needed on the server side.
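The data binding point can be made concrete with a minimal sketch of the extra binding step a JS+REST back end has to provide for itself. The `Customer` model, the field names and the form-encoded request body are assumptions chosen for illustration; a real application would usually delegate this step to a JSON or form-binding library rather than write it by hand.

```java
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

class ServerSideBinding {

    // Hypothetical server-side model. In JSF a managed bean property would be
    // bound to the view through an EL expression instead of the code below.
    record Customer(String name, int age) {}

    // Parses a form-encoded body such as "name=Ann&age=30" into key/value pairs.
    static Map<String, String> parseForm(String body) {
        Map<String, String> fields = new HashMap<>();
        if (body == null || body.isEmpty()) {
            return fields;
        }
        for (String pair : body.split("&")) {
            int eq = pair.indexOf('=');
            if (eq < 0) {
                continue; // skip malformed pairs
            }
            String key = URLDecoder.decode(pair.substring(0, eq), StandardCharsets.UTF_8);
            String value = URLDecoder.decode(pair.substring(eq + 1), StandardCharsets.UTF_8);
            fields.put(key, value);
        }
        return fields;
    }

    // Binds the raw fields to the model and validates them again on the server,
    // since client-side checks cannot be relied upon.
    static Customer bind(String body) {
        Map<String, String> fields = parseForm(body);
        String name = fields.get("name");
        if (name == null || name.isBlank()) {
            throw new IllegalArgumentException("name is required");
        }
        try {
            return new Customer(name, Integer.parseInt(fields.getOrDefault("age", "")));
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("age must be a number");
        }
    }

    public static void main(String[] args) {
        System.out.println(bind("name=Ann+Lee&age=30")); // prints Customer[name=Ann Lee, age=30]
    }
}
```

The parsing, conversion and validation shown here are the parts that JSF's converters and validators cover as part of its own life cycle.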

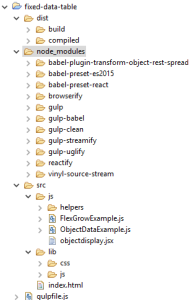

- Project modularity: Build pipelines such as gulp and grunt are available for JS frameworks and help modularize the scripts, but a separate modularity model is still needed for the business logic. In JSF applications the front end and back end use the same technology, so a single standard modular approach can be applied.

- White-box testing tools: JavaScript has better white-box testing tools, as no specific deployment is needed. JSF applications need special embedded containers for white-box testing.

- Server resource usage: JS applications use less server I/O and CPU than JSF applications. If PrimeFaces applications are planned with proper client-side validators and converters, server CPU usage can be reduced considerably.

- Client resource usage: JS applications use more client resources than JSF applications, as more of the processing is done on the client machine.

- Scalability: JS applications scale better because they are stateless by nature, whereas JSF applications are stateful by default.

- Mobile: JSF component libraries like PrimeFaces have built-in mobile UI support, which can be leveraged to build mobile-first JSF applications. JS is better suited to mobile apps because it is inherently client-side centric.

- Cloud compatibility: JS applications can connect to cloud endpoints, and any JEE application can be deployed in the cloud. JSF 2.x, with its path-based resource handling and bookmarkable URLs, simplifies cloud-based resource handling.

- Post-Redirect-Get: Starting with JSF 2.x, there is a cleaner way to implement this pattern on the server side. Modern client-side MVC frameworks are built around Ajax workflows and do not cleanly support this pattern, although it can be argued that it is not needed for Ajax workflows.

- Browser memory: This depends mostly on the implementation rather than on the framework. JS applications offer finer-grained control over profiling and minimising memory usage. JSF developers mostly have to rely on the vendors of the libraries they use, such as PrimeFaces, to resolve issues.

- Network usage: Properly designed JS applications use less network bandwidth than JSF applications, where server state needs to be maintained.

- DOM rendering: JS libraries like ReactJS generally render the DOM faster than pure JSF applications.

- Client-side validation: JS frameworks support client-side validation by default. JSF component libraries like PrimeFaces support most client-side validations, bringing JSF very close to JS applications.

- Browser compatibility: Both JS frameworks and JSF component libraries support the major modern web browsers. That support is limited to the components the frameworks provide; incorrect use of basic HTML elements may still result in incorrect display.

- Auto-complete: Most JS frameworks and JSF component libraries provide auto-complete components.

- Responsive UI: Most modern JS frameworks are built for implementing responsive UIs. JSF component libraries like PrimeFaces provide specialized CSS for responsive UIs, but developers need to ensure it is used correctly to get good results.
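The Post-Redirect-Get pattern listed among the criteria is easier to judge once the underlying HTTP exchange is visible. In JSF 2 the redirect is typically requested by adding `faces-redirect=true` to a navigation outcome; the sketch below is not JSF code but a framework-neutral illustration built on the JDK's bundled HTTP server. The `/orders` path, the `Reply` type and the in-memory order list are assumptions made for the example.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

class PostRedirectGetSketch {

    // What the server decides to send back (hypothetical helper type).
    record Reply(int status, String location, String body) {}

    // In-memory stand-in for a real data store.
    static final List<String> ORDERS = new CopyOnWriteArrayList<>();

    // Core of the pattern: POST changes state and redirects, GET only renders.
    static Reply respond(String method, String formBody) {
        if ("POST".equals(method)) {
            ORDERS.add(formBody);
            // 303 See Other: the browser follows up with a GET to the Location.
            return new Reply(303, "/orders", "");
        }
        // A plain GET can be refreshed or bookmarked without resubmitting data.
        return new Reply(200, null, "orders: " + ORDERS);
    }

    // Wires the decision above into the JDK's built-in HTTP server.
    static void handle(HttpExchange ex) throws IOException {
        try {
            String form = new String(ex.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
            Reply reply = respond(ex.getRequestMethod(), form);
            if (reply.location() != null) {
                ex.getResponseHeaders().set("Location", reply.location());
            }
            byte[] body = reply.body().getBytes(StandardCharsets.UTF_8);
            // A response length of -1 tells the server that no body follows.
            ex.sendResponseHeaders(reply.status(), body.length == 0 ? -1 : body.length);
            if (body.length > 0) {
                ex.getResponseBody().write(body);
            }
        } finally {
            ex.close();
        }
    }

    // Starts the server; passing port 0 lets the operating system pick a free port.
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("localhost", port), 0);
        server.createContext("/orders", PostRedirectGetSketch::handle);
        server.start();
        return server;
    }
}
```

Calling `PostRedirectGetSketch.start(8080)` and submitting a form to `/orders` should show the browser landing on a GET page, so a refresh does not repeat the POST. An Ajax workflow never leaves the page, which is why the pattern is often considered unnecessary there.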

Summary:

JSF is better if:

- The back end is a JEE application.

- The application has at most around 10,000 users and is not expected to scale to hundreds of thousands of users.

- It is a business application in which business rules matter more than a flashy UI.

- Business users are trained to use the application.

Ajax+REST JS applications are better if:

- The back end may be a non-Java application.

- The end users are not known in advance.

- The application needs to scale to a very large user base.
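The scaling criterion in both lists comes down to where conversational state lives. The following is a minimal sketch, not tied to JSF or any JS framework, that contrasts a node holding per-session state in memory with a node that receives the state on every request. The class names and the "items in a cart" counter are assumptions made for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class StateScalingSketch {

    // Stateful style: each node remembers per-session data in its own memory,
    // similar in spirit to server-side session or view state.
    static final class StatefulNode {
        private final Map<String, Integer> itemsBySession = new ConcurrentHashMap<>();

        int addItem(String sessionId) {
            return itemsBySession.merge(sessionId, 1, Integer::sum);
        }
    }

    // Stateless style: the client sends the current state with every request,
    // so the node keeps nothing between calls.
    static final class StatelessNode {
        int addItem(int currentCount) {
            return currentCount + 1;
        }
    }

    public static void main(String[] args) {
        StatefulNode nodeA = new StatefulNode();
        StatefulNode nodeB = new StatefulNode();
        nodeA.addItem("session-1");
        // The second request lands on a node that has never seen the session.
        System.out.println("stateful, routed to node B: " + nodeB.addItem("session-1")); // 1

        StatelessNode nodeC = new StatelessNode();
        StatelessNode nodeD = new StatelessNode();
        int count = nodeC.addItem(0);
        // Any node can continue, because the request carries the state.
        System.out.println("stateless, routed to node D: " + nodeD.addItem(count)); // 2
    }
}
```

With stateful nodes, a load balancer needs sticky sessions or session replication to produce the right answer, and that overhead grows with the number of users. Stateless nodes can be added or removed freely.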